examples/NLG/ contains an example implementation of LoRA in GPT-2 using our package, which can be used to reproduce the result in our paper. examples/NLU/ contains an example implementation of LoRA in RoBERTa and DeBERTa using our package, which produces competitive results on the GLUE benchmark. See how we use loralib in GPT-2, RoBERTa, and DeBERTa v2.

Load the pretrained checkpoint first, then the LoRA checkpoint, passing strict=False to both calls:

```python
import torch

# `model` is a PyTorch module whose adapted layers come from loralib
# Load the pretrained checkpoint first
model.load_state_dict(torch.load('ckpt_pretrained.pt'), strict=False)
# Then load the LoRA checkpoint
model.load_state_dict(torch.load('ckpt_lora.pt'), strict=False)
```

Now training can proceed as usual.

While our examples focus on a simple yet effective setup, namely adapting only the q and v projections in a Transformer, LoRA can be applied to any subset of pre-trained weights. We encourage you to explore different configurations, such as adapting the embedding layer by replacing nn.Embedding with lora.Embedding and/or adapting the MLP layers. The optimal configuration very likely varies across model architectures and tasks.

Some Transformer implementations use a single nn.Linear for the query, key, and value projection matrices. If one wishes to constrain the rank of the updates to the individual matrices, one has to either break it up into three separate matrices or use lora.MergedLinear. Make sure to modify the checkpoint accordingly if you choose to break up the layer.

Bias vectors can also be trained alongside the LoRA matrices:

```python
import loralib as lora

# = Before =
# lora.mark_only_lora_as_trainable(model)  # Not training any bias vectors
# = After =
# Training all bias vectors associated with modules we apply LoRA to
lora.mark_only_lora_as_trainable(model, bias='lora_only')
```
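To make the bias options concrete, the pure-Python sketch below mimics, on plain parameter names, how we understand `lora.mark_only_lora_as_trainable` to choose trainable parameters: names containing `lora_` stay trainable, `bias='all'` adds every bias vector, and `bias='lora_only'` adds only the biases of LoRA-adapted modules. It does not use PyTorch, and the helper `trainable_names`, its arguments, and the example parameter names are hypothetical.

```python
"""Sketch: which parameters stay trainable under each `bias=` mode.

Assumptions (illustrative, not loralib's code): parameters are known only by
dotted names, LoRA matrices contain the substring "lora_", and
`lora_modules` lists the module paths that were replaced by LoRA layers.
`trainable_names` is a hypothetical helper.
"""
from typing import Iterable, Set


def trainable_names(
    param_names: Iterable[str],
    lora_modules: Set[str],
    bias: str = "none",
) -> Set[str]:
    """Return the names that would keep requires_grad=True."""
    if bias not in ("none", "all", "lora_only"):
        raise ValueError(f"unknown bias mode: {bias!r}")
    names = list(param_names)
    # LoRA matrices always stay trainable; everything else starts frozen.
    keep = {n for n in names if "lora_" in n}
    for name in names:
        module, _, leaf = name.rpartition(".")
        if leaf != "bias":
            continue
        if bias == "all":
            # Every bias vector in the model, including LayerNorm biases.
            keep.add(name)
        elif bias == "lora_only" and module in lora_modules:
            # Only biases of modules that LoRA was applied to.
            keep.add(name)
    return keep


if __name__ == "__main__":
    params = [
        "attn.q_proj.weight", "attn.q_proj.bias",
        "attn.q_proj.lora_A", "attn.q_proj.lora_B",
        "attn.k_proj.weight", "attn.k_proj.bias",
        "ln.weight", "ln.bias",
    ]
    adapted = {"attn.q_proj"}  # hypothetical: only the q projection uses LoRA
    for mode in ("none", "lora_only", "all"):
        print(mode, sorted(trainable_names(params, adapted, mode)))
```

With the default mode only the two `lora_` matrices remain trainable; `'lora_only'` adds `attn.q_proj.bias`, and `'all'` also adds `attn.k_proj.bias` and `ln.bias`. Assuming the LoRA checkpoint stores only such trained entries, neither checkpoint alone covers every parameter of the model, which is why each is loaded with strict=False.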

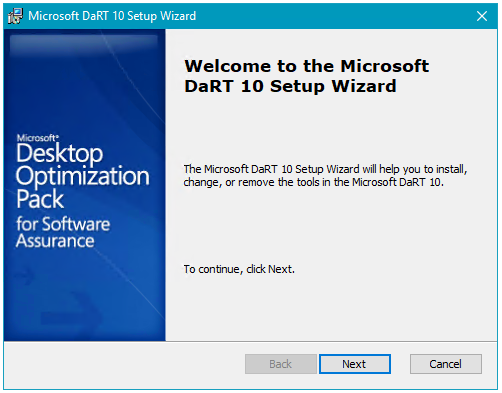

We evaluated on the E2E NLG Challenge, DART, and WebNLG. Non-LoRA baselines, except for adapter on GPT-2 large, are taken from Li and Liang (2021). We include confidence intervals on results from our experiments. Please follow the instructions in examples/NLG/ to reproduce our results.

(The initial release of this repo has been archived in the branch "snapshot-9-15-2021".)

There are several directories in this repo:

- loralib/ contains the source code for the package loralib, which needs to be installed to run the examples we provide.

Please follow the instructions in examples/NLU/ to reproduce our results.

On GPT-2, LoRA compares favorably to both full finetuning and other efficient tuning methods, such as adapter (Houlsby et al., 2019) and prefix tuning (Li and Liang, 2021).

LoRA reduces the number of trainable parameters by learning pairs of rank-decomposition matrices while freezing the original weights. This vastly reduces the storage requirement for large language models adapted to specific tasks and enables efficient task-switching during deployment, all without introducing inference latency. LoRA also outperforms several other adaptation methods, including adapter, prefix-tuning, and fine-tuning. We obtain results comparable or superior to full finetuning on the GLUE benchmark using RoBERTa (Liu et al., 2019) base and large and DeBERTa (He et al., 2020) XXL 1.5B, while training and storing only a fraction of the parameters.

RoBERTa and DeBERTa LoRA checkpoints are available for download. Fine-tuning numbers are taken from Liu et al. Note: You still need the original pre-trained checkpoint from Hugging Face to use the LoRA checkpoints.
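The parameter savings and the claim of no added inference latency can be checked with a small sketch. Assuming a frozen d×k weight W, a rank-r pair B (d×r) and A (r×k), and the α/r scaling described in the LoRA paper, the adapted layer computes h = Wx + (α/r)BAx, and merging W + (α/r)BA before deployment gives the same output with a single matrix product. Plain Python lists stand in for tensors; the function names are illustrative, not loralib's API.

```python
"""Sketch of a LoRA-adapted linear map with plain Python lists.

Assumptions (illustrative): the frozen weight W is d x k, A is r x k, B is
d x r, and the low-rank update is scaled by alpha / r as in the LoRA paper.
The helper names are hypothetical, not loralib's API.
"""
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, scale: float) -> Vector:
    """h = W x + scale * B (A x): frozen path plus low-rank path."""
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    return [h + scale * u for h, u in zip(base, update)]


def merge(w: Matrix, a: Matrix, b: Matrix, scale: float) -> Matrix:
    """W' = W + scale * B A, so inference needs a single matrix product."""
    delta = matmul(b, a)
    return [
        [wij + scale * dij for wij, dij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """Full fine-tuning updates d*k weights; LoRA trains r*(d + k)."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    full, low_rank = trainable_params(4096, 4096, 8)
    print(full, low_rank, f"{low_rank / full:.2%}")

    w = [[1.0, 2.0], [3.0, 4.0]]  # frozen pretrained weight (d = k = 2)
    a = [[0.5, -1.0]]             # rank r = 1
    b = [[2.0], [1.0]]
    x = [1.0, 1.0]
    alpha, r = 1.0, 1
    scale = alpha / r
    print(lora_forward(w, a, b, x, scale))   # adapted output
    print(matvec(merge(w, a, b, scale), x))  # same output after merging
    # With B = 0 (the paper's initialization) the layer equals the pretrained one.
    print(lora_forward(w, a, [[0.0], [0.0]], x, scale), matvec(w, x))
```

For a single 4096×4096 matrix at rank 8, this counts 16,777,216 fully fine-tuned weights against 65,536 LoRA parameters (about 0.39%). The two forward passes agree, which is the sense in which merging avoids extra latency: the deployed layer is again an ordinary d×k matrix.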

See our paper for a detailed description of LoRA.

Edward J. Hu\*, Yelong Shen\*, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, Weizhu Chen

Update 2/2023: LoRA is now supported by the state-of-the-art Parameter-Efficient Fine-Tuning (PEFT) library by Hugging Face.

LoRA: Low-Rank Adaptation of Large Language Models

(For the radio communication technique, see LoRa.)

This repo contains the source code of the Python package loralib and several examples of how to integrate it with PyTorch models, such as those in Hugging Face.
